Time: 2025-02-17 11:47:11

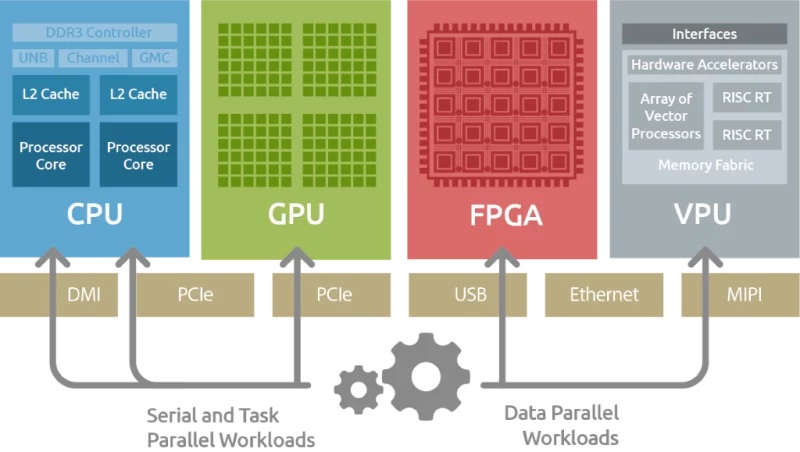

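FPGAs (Field-Programmable Gate Arrays) and processors such as CPUs (Central Processing Units), GPUs (Graphics Processing Units), APUs (Accelerated Processing Units), DSPs (Digital Signal Processors), NPUs (Neural Processing Units), and TPUs (Tensor Processing Units) are all computing platforms, but they differ significantly in architecture, functionality, and use cases. Below is an overview of how they relate and where they differ: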

Definition: An FPGA is a reconfigurable hardware device that consists of an array of programmable logic blocks and interconnects.

Key Features:

Highly flexible and reprogrammable.

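Fine-grained parallel processing: independent logic circuits operate simultaneously on every clock cycle.

Low-level hardware control, typically designed in a hardware description language (HDL) such as Verilog or VHDL.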

Used for prototyping, acceleration, and custom hardware designs.

Use Cases: Digital signal processing, ASIC prototyping, real-time systems, and hardware acceleration.
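FPGA logic blocks are commonly built around small lookup tables (LUTs): a k-input LUT stores a 2^k-entry truth table, so loading different configuration bits turns the same silicon into a different logic function. The sketch below is a toy Python model of a single 4-input LUT, assuming 4 inputs for readability (many current devices use 6-input LUTs plus flip-flops and carry logic); `LUT4` and `config_from_function` are hypothetical names, not part of any vendor toolchain.

```python
from itertools import product


class LUT4:
    """Toy model of a 4-input lookup table, the basic FPGA logic element.

    The 16-bit `config` stands in for the configuration bits loaded into the
    FPGA: bit i holds the output for the input combination whose binary value is i.
    """

    def __init__(self, config: int) -> None:
        if not 0 <= config < 1 << 16:
            raise ValueError("a 4-input LUT needs exactly 16 configuration bits")
        self.config = config

    def __call__(self, a: int, b: int, c: int, d: int) -> int:
        index = (d << 3) | (c << 2) | (b << 1) | a  # inputs select one truth-table row
        return (self.config >> index) & 1


def config_from_function(fn) -> int:
    """Build the 16 configuration bits that make a LUT behave like fn."""
    bits = 0
    for d, c, b, a in product((0, 1), repeat=4):
        index = (d << 3) | (c << 2) | (b << 1) | a
        bits |= (fn(a, b, c, d) & 1) << index
    return bits


# "Reprogramming" the same hardware: only the configuration bits change.
and4 = LUT4(config_from_function(lambda a, b, c, d: a & b & c & d))
xor4 = LUT4(config_from_function(lambda a, b, c, d: a ^ b ^ c ^ d))
print(and4(1, 1, 1, 1), xor4(1, 0, 1, 1))  # prints: 1 1
```

In a real FPGA, thousands of such LUTs are wired together through the programmable interconnect, and the HDL toolchain (synthesis, place and route) computes all of these configuration bits for you.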

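Definition: A CPU is a general-purpose processor designed to execute a wide range of instructions with low latency, primarily optimized for fast execution of individual threads. Modern CPUs also provide multiple cores and SIMD units for limited parallelism.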

Key Features:

Optimized for sequential processing (deep pipelines, branch prediction, out-of-order execution, large caches).

High single-thread performance.

Versatile and programmable.

Use Cases: General-purpose computing, operating systems, and applications requiring complex decision-making.
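High single-thread performance matters because most programs contain serial portions that cannot be spread across parallel units. Amdahl's law, a standard result not stated in the original text, quantifies this: with parallel fraction p and n parallel workers, the speedup is at most 1 / ((1 - p) + p / n). A minimal sketch, where `amdahl_speedup` is a hypothetical helper name:

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a program can run in parallel.

    Amdahl's law: S(n) = 1 / ((1 - p) + p / n), where p is the parallel
    fraction of the work and n the number of parallel workers.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


# With 10% serial work, even 1000 parallel units give less than a 10x speedup,
# so the speed of the serial part (single-thread performance) still dominates.
for n in (1, 8, 1000):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

This is why CPUs spend so much silicon on making one thread fast, and why parallel accelerators are usually paired with a CPU rather than replacing it.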

Definition: A GPU is a specialized processor designed for parallel processing, originally for graphics rendering.

Key Features:

Massively parallel architecture (thousands of cores).

Optimized for data-parallel tasks.

High throughput for floating-point operations.

Use Cases: Graphics rendering, machine learning, scientific computing, and simulations.
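"Data-parallel" means the same small program runs independently on many data elements. The sketch below emulates, sequentially in Python, the CUDA-style model in which each GPU thread computes one output element of SAXPY (out = a * x + y) using its global index `blockIdx.x * blockDim.x + threadIdx.x`. The function names `saxpy_kernel` and `launch` and the default block size of 4 are illustrative assumptions; on a real GPU the threads run concurrently and blocks are typically much larger.

```python
from __future__ import annotations


def saxpy_kernel(block_idx: int, thread_idx: int, block_dim: int,
                 a: float, x: list[float], y: list[float], out: list[float]) -> None:
    """Work done by ONE GPU thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global index, as in CUDA
    if i < len(x):  # guard: the last block may have spare threads
        out[i] = a * x[i] + y[i]


def launch(a: float, x: list[float], y: list[float], block_dim: int = 4) -> list[float]:
    """Emulate a 1-D kernel launch; a GPU would run these threads concurrently."""
    out = [0.0] * len(x)
    grid_dim = (len(x) + block_dim - 1) // block_dim  # ceil-divide to cover every element
    for b in range(grid_dim):
        for t in range(block_dim):
            saxpy_kernel(b, t, block_dim, a, x, y, out)
    return out


print(launch(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0]))
# [12.0, 24.0, 36.0, 48.0, 60.0]
```

Because no thread depends on another thread's result, the hardware is free to schedule thousands of them at once, which is exactly the kind of workload GPU architectures are built for.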

Definition: An APU (Accelerated Processing Unit, a term used mainly by AMD) is a hybrid processor that combines a CPU and GPU on a single chip.

Key Features:

Integrates CPU and GPU capabilities, with both sharing the same system memory.

Optimized for power efficiency and compact designs.

Use Cases: Consumer electronics, gaming consoles, and mobile devices.

Definition: A DSP is a specialized processor optimized for digital signal processing tasks.

Key Features:

Efficient at multiply-accumulate (MAC) operations, which underlie FFTs and digital filtering.

Low latency and deterministic performance.

Use Cases: Audio processing, telecommunications, and image processing.
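A finite impulse response (FIR) filter is a typical DSP workload: every output sample is a chain of multiply-accumulate steps, y[n] = sum over k of h[k] * x[n - k]. The sketch below is a plain Python reference version, assuming floating-point samples and a zero-initialized delay line; `fir_filter` is a hypothetical name, and a real DSP would run the inner loop on dedicated MAC hardware, often in fixed-point arithmetic.

```python
def fir_filter(samples: list, taps: list) -> list:
    """Direct-form FIR filter: y[n] = sum_k h[k] * x[n - k].

    Each output is a chain of multiply-accumulate (MAC) steps, the operation
    DSP hardware is designed to execute efficiently.
    """
    if not taps:
        raise ValueError("need at least one filter tap")
    history = [0.0] * len(taps)  # delay line, newest sample first; starts at zero
    output = []
    for x in samples:
        history = [x] + history[:-1]  # shift the new sample into the delay line
        acc = 0.0
        for h, past in zip(taps, history):
            acc += h * past  # one MAC
        output.append(acc)
    return output


# 4-tap moving average: a simple low-pass filter.
print(fir_filter([4.0, 4.0, 4.0, 4.0, 0.0, 0.0], [0.25] * 4))
# [1.0, 2.0, 3.0, 4.0, 3.0, 2.0]
```

The output ramps up and down smoothly instead of jumping, which is the low-pass behaviour of a moving average. The fixed amount of work per sample is also what makes DSP timing deterministic.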

Definition: An NPU is a specialized processor designed for accelerating neural network computations.

Key Features:

Optimized for matrix multiplications and AI workloads.

High energy efficiency for AI inference.

Use Cases: Machine learning inference, edge AI, and deep learning.
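Much of an NPU's energy efficiency comes from running inference in low-precision integer formats such as INT8 rather than 32-bit floating point, which many NPUs and edge accelerators support. The sketch below shows the general idea under simple assumptions (symmetric, per-tensor scaling; all helper names hypothetical): quantize both matrices to int8, multiply with integer accumulation, then rescale the result back to real values.

```python
def symmetric_scale(matrix):
    """Per-tensor scale chosen so the largest magnitude maps to 127."""
    max_abs = max(abs(v) for row in matrix for v in row)
    return max_abs / 127 if max_abs > 0 else 1.0


def quantize(matrix, scale):
    """Symmetric int8 quantization: q = clamp(round(v / scale), -128, 127)."""
    return [[max(-128, min(127, round(v / scale))) for v in row] for row in matrix]


def int_matmul(a_q, b_q):
    """Integer matrix multiply. Python ints never overflow; hardware
    typically sums int8 products in 32-bit accumulators instead."""
    return [[sum(a_q[i][k] * b_q[k][j] for k in range(len(b_q)))
             for j in range(len(b_q[0]))]
            for i in range(len(a_q))]


def quantized_matmul(a, b):
    """Approximate a @ b using only int8 operands and integer accumulation."""
    sa, sb = symmetric_scale(a), symmetric_scale(b)
    acc = int_matmul(quantize(a, sa), quantize(b, sb))
    return [[v * sa * sb for v in row] for row in acc]  # rescale to real values


a = [[0.5, -1.0], [1.5, 2.0]]
b = [[1.0, 0.0], [0.5, -0.5]]
print(quantized_matmul(a, b))  # close to the exact product [[0.0, 0.5], [2.5, -1.0]]
```

The result differs from the exact product only by small rounding errors, while the expensive inner loop uses narrow integer multipliers that cost far less energy and area than floating-point units.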

Definition: A TPU is a custom ASIC developed by Google for accelerating machine learning workloads. It was originally built for TensorFlow and is now also programmed through JAX and PyTorch (via the XLA compiler).

Key Features:

Optimized for tensor operations.

High throughput for AI training and inference.

Use Cases: Large-scale machine learning, cloud-based AI.
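TPUs perform their tensor operations on a systolic array: a grid of multiply-accumulate cells in which operands flow from neighbour to neighbour, so each value fetched from memory is reused many times. The sketch below simulates a small output-stationary systolic array cycle by cycle in Python. It is an illustration of the technique only, not Google's design: the first-generation TPU used a 256 x 256 weight-stationary array, and `systolic_matmul` is a hypothetical name.

```python
def systolic_matmul(a, b):
    """Cycle-level simulation of an output-stationary systolic array.

    PE(i, j) keeps a running sum for C[i][j]. Rows of A enter from the left
    and columns of B from the top, each skewed by one cycle per row/column,
    so PE(i, j) sees the matching pair a[i][k], b[k][j] on cycle i + j + k.
    """
    n, m, p = len(a), len(b), len(b[0])  # a is n x m, b is m x p
    acc = [[0] * p for _ in range(n)]
    a_reg = [[0] * p for _ in range(n)]  # value each PE passes to its right neighbour
    b_reg = [[0] * p for _ in range(n)]  # value each PE passes to the PE below
    # The last pair reaches PE(n-1, p-1) on cycle (n-1) + (m-1) + (p-1).
    for t in range(n + m + p - 2):
        new_a = [[0] * p for _ in range(n)]
        new_b = [[0] * p for _ in range(n)]
        for i in range(n):
            for j in range(p):
                if j == 0:  # left edge: feed row i of A, delayed by i cycles
                    k = t - i
                    a_in = a[i][k] if 0 <= k < m else 0
                else:       # otherwise take what the left neighbour held last cycle
                    a_in = a_reg[i][j - 1]
                if i == 0:  # top edge: feed column j of B, delayed by j cycles
                    k = t - j
                    b_in = b[k][j] if 0 <= k < m else 0
                else:       # otherwise take what the upper neighbour held last cycle
                    b_in = b_reg[i - 1][j]
                acc[i][j] += a_in * b_in  # one MAC per PE per cycle
                new_a[i][j], new_b[i][j] = a_in, b_in
        a_reg, b_reg = new_a, new_b  # all PEs update their registers together
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Each cell only talks to its immediate neighbours, which keeps wiring short and lets the array grow to tens of thousands of MAC units while memory traffic stays modest.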

FPGA vs. CPU: FPGAs are hardware-programmable and excel at parallel tasks, while CPUs are software-programmable and optimized for sequential tasks. Specific tasks can be offloaded from a CPU to an FPGA to accelerate them.
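One common way an FPGA exploits parallelism is pipelining: a custom datapath is split into S stages, and once the pipeline is full, a new result emerges every stage time. The idealized cycle-count model below compares processing each item through all S steps before starting the next against a fully pipelined datapath. It assumes one cycle per stage and no stalls, and it ignores clock frequency, which in practice is usually lower for FPGA fabric than for a CPU; the function names are hypothetical.

```python
def sequential_cycles(items: int, stages: int, cycles_per_stage: int = 1) -> int:
    """Cycles if each item passes through every stage before the next item starts."""
    return items * stages * cycles_per_stage


def pipelined_cycles(items: int, stages: int, cycles_per_stage: int = 1) -> int:
    """Cycles for a fully pipelined datapath: fill once, then one result per stage time."""
    if items == 0:
        return 0
    return (stages + items - 1) * cycles_per_stage


# 1000 items through a 5-stage computation.
print(sequential_cycles(1000, 5), pipelined_cycles(1000, 5))  # 5000 1004
```

For long input streams the pipelined version approaches an S-fold speedup, and an FPGA can additionally replicate the whole pipeline several times if it has spare logic.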

FPGA vs. GPU: Both are parallel processors, but GPUs are fixed-architecture and optimized for data-parallel tasks, while FPGAs are reconfigurable and can be tailored for specific algorithms.

FPGA vs. APU: APUs combine CPU and GPU capabilities for general-purpose and graphics tasks, while FPGAs are used for custom hardware acceleration and prototyping.

FPGA vs. DSP: DSPs are specialized for signal processing, while FPGAs can be programmed to perform DSP tasks with added flexibility and parallelism.

FPGA vs. NPU/TPU: NPUs and TPUs are specialized for AI workloads, while FPGAs can be programmed to accelerate AI tasks, though typically less efficiently than dedicated NPUs/TPUs.

| Feature | FPGA | CPU | GPU | APU | DSP | NPU | TPU |
|---|---|---|---|---|---|---|---|
| Architecture | Reconfigurable logic | General-purpose | Massively parallel | CPU + GPU integrated | Signal processing | Neural network-optimized | Tensor-optimized |
| Flexibility | High (reprogrammable) | High (software-based) | Medium (fixed architecture) | Medium (fixed architecture) | Low (specialized) | Low (specialized) | Low (specialized) |
| Parallelism | High (customizable) | Low to medium (a few to dozens of cores, SIMD) | Very high | Medium | Medium | High | Very high |
| Power Efficiency | Medium | Medium | Low to medium | High | High | Very high | Very high |
| Typical Use Cases | Custom hardware, acceleration | General-purpose tasks | Graphics, AI, HPC | Consumer electronics | Signal processing | AI inference | AI training/inference |
| Development Complexity | High (hardware design) | Low (software) | Medium (CUDA/OpenCL) | Low (software) | Medium (specialized) | Medium (AI frameworks) | Medium (AI frameworks) |

FPGA: Use for custom hardware acceleration, real-time systems, and prototyping.

CPU: Use for general-purpose computing, complex decision-making, and tasks requiring high single-thread performance.

GPU: Use for graphics rendering, machine learning, and data-parallel tasks.

APU: Use in power-efficient devices like laptops and gaming consoles.

DSP: Use for real-time signal processing tasks like audio and telecommunications.

NPU/TPU: Use for AI inference and training, especially in edge devices (NPU) or cloud environments (TPU).
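The selection guide above can be encoded as a toy lookup that returns candidate platforms ranked by how many of a workload's traits they match. The trait tags are hypothetical labels paraphrasing the guide, not an established taxonomy, and real decisions also weigh cost, tooling, volume, and team expertise.

```python
from __future__ import annotations

# Hypothetical trait tags paraphrasing the rules of thumb above.
GUIDE = {
    "FPGA": {"custom_hardware", "realtime", "prototyping"},
    "CPU": {"general_purpose", "complex_control_flow", "single_thread"},
    "GPU": {"graphics", "ai", "data_parallel"},
    "APU": {"power_efficient", "consumer_device"},
    "DSP": {"realtime", "signal_processing"},
    "NPU": {"ai", "edge"},
    "TPU": {"ai", "cloud"},
}


def candidate_platforms(traits: set[str]) -> list[str]:
    """Platforms matching at least one trait, most matches first, ties alphabetical."""
    scored = [(len(tags & traits), name) for name, tags in GUIDE.items()]
    ranked = sorted(scored, key=lambda s: (-s[0], s[1]))
    return [name for score, name in ranked if score > 0]


print(candidate_platforms({"ai", "edge"}))                   # ['NPU', 'GPU', 'TPU']
print(candidate_platforms({"realtime", "signal_processing"}))  # ['DSP', 'FPGA']
```

Returning a ranked list rather than a single answer reflects the overlap in the guide itself: GPUs, NPUs, and TPUs all handle AI work, and FPGAs and DSPs both serve real-time signal processing.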

FPGAs are unique in their reconfigurability and flexibility, making them ideal for custom hardware solutions and acceleration.

CPUs are the backbone of general-purpose computing.

GPUs excel in parallel processing for graphics and AI.

APUs combine CPU and GPU capabilities for compact, power-efficient designs.

DSPs are specialized for signal processing.

NPUs/TPUs are optimized for AI workloads, with TPUs being particularly powerful for large-scale machine learning.

The choice between these technologies depends on the specific application, performance requirements, and development constraints.