Time: 2025-03-19 11:52:06

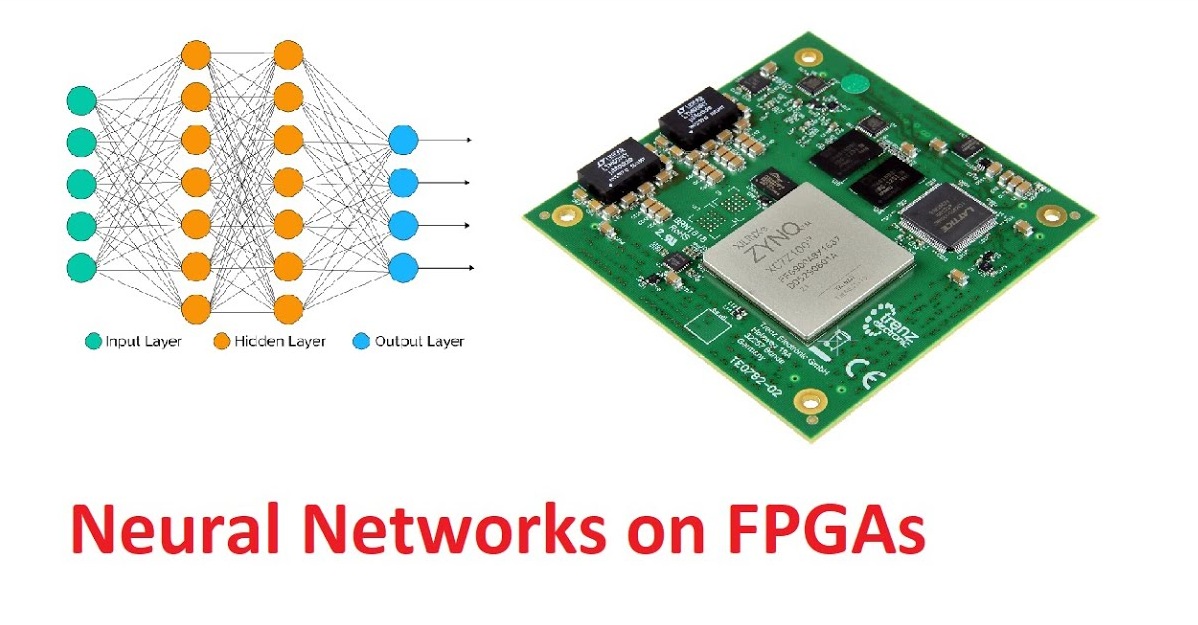

Deploying neural network models on Field-Programmable Gate Arrays (FPGAs) is a powerful way to achieve high-performance, low-latency, and energy-efficient inference. FPGAs are reconfigurable hardware platforms that can be tailored to specific neural network architectures. Below is a step-by-step guide to deploying neural network models on FPGAs:

Model Complexity: Determine your network's architecture (e.g., CNN, RNN, Transformer), its parameter count, and the number of operations it requires per inference.

Performance Needs: Identify latency, throughput, and power consumption requirements.

FPGA Resources: Understand the FPGA's available resources (e.g., DSP slices, BRAM, LUTs, and off-chip memory bandwidth) and confirm they can accommodate your model's weights, activations, and arithmetic.
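As a rough first check, you can estimate whether a model's weights fit in on-chip memory at a given precision. The sketch below assumes Xilinx-style 36 Kb BRAM blocks (36,864 bits each), assumes perfect packing, and ignores activations and buffering, so it gives only a lower bound; the per-layer parameter counts and the BRAM budget are hypothetical.

```python
import math

# Assumption: Xilinx-style 36 Kb block RAM (36 * 1024 = 36,864 bits per BRAM36).
BRAM36_BITS = 36 * 1024


def weight_bits(param_counts, bits_per_weight):
    """Total storage needed for all weights at the given precision."""
    return sum(param_counts) * bits_per_weight


def bram36_needed(total_bits):
    """Lower bound on BRAM36 blocks, assuming perfect packing (real usage is higher)."""
    return math.ceil(total_bits / BRAM36_BITS)


def fits_on_chip(param_counts, bits_per_weight, available_bram36):
    """True if the weights alone (ignoring activations and buffers) fit in the BRAM36 budget."""
    return bram36_needed(weight_bits(param_counts, bits_per_weight)) <= available_bram36


# Hypothetical per-layer parameter counts for a small CNN.
layers = [864, 18_432, 73_728, 10_240]
for bits in (32, 8):
    total = weight_bits(layers, bits)
    print(f"{bits}-bit weights: {total} bits, at least {bram36_needed(total)} BRAM36 blocks")
```

With these made-up numbers, 32-bit weights need at least 90 blocks while 8-bit weights need at least 23, which is one reason quantization matters so much on FPGAs. Real utilization is usually higher because BRAM widths and depths must match the memory layout the design uses.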

Deep Learning Frameworks: Use frameworks like TensorFlow or PyTorch to design and train your neural network; ONNX serves as a common interchange format for moving the trained model into FPGA toolchains.

FPGA-Specific Tools:

Xilinx: Vitis AI, Vivado, and Vitis HLS (High-Level Synthesis).

Intel FPGA: OpenVINO, Intel Quartus, and Intel FPGA SDK for OpenCL.

Lattice Semiconductor: Lattice Propel or Lattice sensAI.

Open-Source Tools: Apache TVM, FINN (for Xilinx FPGAs), or hls4ml (originally developed for low-latency inference in high-energy physics, but usable in other applications).

Quantization: Convert floating-point weights and activations to low-precision fixed-point or integer formats (e.g., INT8) to reduce DSP, memory, and bandwidth usage and improve energy efficiency.
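A minimal sketch of symmetric, per-tensor INT8 quantization, the simplest common scheme: one scale maps the largest weight magnitude to 127, and every value is rounded to the nearest integer code. Real toolchains typically also calibrate activation ranges on sample data and may use per-channel scales; this example only shows the core arithmetic on a made-up weight list.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # maps the largest magnitude to 127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate real values from INT8 codes."""
    return [qi * scale for qi in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
max_err = max(abs(w - a) for w, a in zip(weights, approx))
print(q, scale, max_err)  # rounding error is at most scale / 2 per value
```

Here the codes come out as [50, -127, 0, 100]; the tiny weight 0.003 collapses to zero, which illustrates why small-magnitude values are the first to lose precision.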

Pruning: Remove redundant neurons or weights to reduce model size.
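One simple form of pruning is magnitude pruning: zero out the fraction of weights with the smallest absolute values. The sketch below does this on a flat, hypothetical weight list; it is unstructured pruning, shown for clarity rather than as a recommendation for any particular toolchain.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured pruning)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices sorted by absolute value; the first k are the ones to prune.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
print(magnitude_prune(w, 0.5))
```

A design note: scattered zeros only save FPGA resources if the hardware can skip them, so structured pruning (removing whole channels or filters) often maps more directly onto fixed-size compute arrays.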

Model Compression: Use techniques like weight sharing or knowledge distillation (training a smaller "student" model to reproduce a larger "teacher" model's outputs) to simplify the model.
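Knowledge distillation trains a compact student network against the softened outputs of a larger teacher, so the smaller model that ends up on the FPGA retains more of the teacher's accuracy. The sketch computes a widely used form of the loss for one example: a temperature-scaled KL divergence to the teacher plus ordinary cross-entropy on the true label, blended by alpha. The default temperature and alpha are illustrative assumptions, and a real setup would use this inside a framework's training loop.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; larger temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(student, label)."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    ce = -math.log(softmax(student_logits)[label])  # hard-label loss at temperature 1
    # The T^2 factor keeps the soft-target gradients on a comparable scale as T changes.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


print(distillation_loss([2.0, 0.5, -1.0], [3.0, 0.2, -2.0], label=0))
```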

Layer Fusion: Combine multiple layers (e.g., convolution, batch normalization, and activation) into a single operation so intermediate results stay on chip, reducing memory bandwidth and latency.
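A common fusion in FPGA deployment flows is folding inference-time batch normalization into the preceding convolution: with s = gamma / sqrt(var + eps), the weights become w * s and the bias becomes (b - mean) * s + beta, so the BN layer disappears entirely. The sketch below checks this on a toy single-channel 1-D convolution; all values are made up, and the helper names are hypothetical.

```python
import math


def conv1d(x, w, b):
    """Valid 1-D convolution (cross-correlation, as in most DL frameworks), one output channel."""
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) + b for i in range(len(x) - k + 1)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch normalization for one channel."""
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in y]


def fold_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Return conv weights and bias that compute conv followed by BN as a single conv."""
    s = gamma / math.sqrt(var + eps)
    return [wi * s for wi in w], (b - mean) * s + beta


def relu(y):
    return [max(0.0, v) for v in y]


x = [1.0, 2.0, -1.0, 0.5, 3.0]
w, b = [0.2, -0.4, 0.1], 0.05
bn = dict(gamma=1.5, beta=-0.1, mean=0.3, var=0.8)

unfused = relu(batch_norm(conv1d(x, w, b), **bn))
w_f, b_f = fold_bn(w, b, **bn)
fused = relu(conv1d(x, w_f, b_f))  # BN is gone; only the re-weighted conv and ReLU remain
print(unfused, fused)
```

On hardware, the remaining ReLU can then be applied to each convolution output as it is produced, so no intermediate feature map is written to memory between the three original layers.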

Export the Model: Export the trained model to a format compatible with FPGA tools (e.g., ONNX, TensorFlow Lite, or Caffe).

Use Framework-Specific Converters:

For Xilinx: Use the Vitis AI Quantizer and Compiler to convert TensorFlow/PyTorch models into an .xmodel file that runs on the DPU (Deep Learning Processing Unit).

For Intel: Use OpenVINO's Model Optimizer (replaced by the ovc model conversion tool in recent releases) to convert models to OpenVINO's Intermediate Representation (IR).

Custom Hardware Description: For advanced users, manually describe the model in HDL (VHDL/Verilog) or HLS (C/C++).

Parallelism: Exploit the FPGA's parallel processing capabilities by designing custom pipelines for neural network layers, for example by unrolling multiply-accumulate loops across DSP slices.

Memory Optimization: Keep frequently reused weights and activations in on-chip BRAM (Block RAM) to minimize accesses to slower off-chip DRAM.
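One widely used BRAM-saving pattern for streaming convolutions is the line buffer: instead of storing an entire input feature map, the design keeps only the last K-1 rows that a K x K kernel needs, while the K x K window itself sits in registers. The arithmetic below compares the two storage requirements for a hypothetical layer shape.

```python
def frame_buffer_bits(height, width, channels, bits):
    """Storage for a full input feature map."""
    return height * width * channels * bits


def line_buffer_bits(kernel, width, channels, bits):
    """Storage for K-1 buffered rows; the K x K window is assumed to live in registers."""
    return (kernel - 1) * width * channels * bits


# Hypothetical layer: 224 x 224 input, 64 channels, 8-bit activations, 3 x 3 kernel.
height, width, channels, bits, kernel = 224, 224, 64, 8, 3
full = frame_buffer_bits(height, width, channels, bits)
lines = line_buffer_bits(kernel, width, channels, bits)
print(full, lines, full // lines)
```

For this shape the line buffer needs 229,376 bits versus 25,690,112 bits for the full frame, a 112x reduction (height / (K - 1)), which is often the difference between fitting on chip or not.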

Dataflow: Optimize data movement between layers to minimize latency and maximize throughput.
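A back-of-the-envelope model helps when balancing parallelism across a layer-pipelined (dataflow) design. The sketch assumes each layer is its own hardware stage that completes parallel_macs multiply-accumulates per cycle with no stalls, and that stages hand over whole frames; under those assumptions, latency is the sum of stage times and steady-state throughput is set by the slowest stage. The layer sizes and the 200 MHz clock are hypothetical.

```python
import math


def layer_cycles(macs, parallel_macs):
    """Cycles for one layer if `parallel_macs` MACs complete every cycle (ideal, no stalls)."""
    return math.ceil(macs / parallel_macs)


def dataflow_estimate(layers, clock_hz):
    """layers: list of (macs, parallel_macs). Returns (latency_s, inferences_per_s)."""
    cycles = [layer_cycles(m, p) for m, p in layers]
    latency = sum(cycles) / clock_hz     # one frame passes through every stage in turn
    throughput = clock_hz / max(cycles)  # in steady state, the slowest stage sets the rate
    return latency, throughput


# Hypothetical 3-layer network on a 200 MHz design.
layers = [(1_000_000, 64), (2_000_000, 256), (500_000, 32)]
lat, thr = dataflow_estimate(layers, 200e6)
print(f"latency {lat * 1e6:.1f} us, throughput {thr:.0f} inferences/s")
```

Here the stages take 15,625, 7,813, and 15,625 cycles, so throughput is 12,800 inferences/s. The middle layer is over-provisioned: 128 parallel MACs would also take 15,625 cycles, freeing DSP slices without lowering throughput.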

Custom IP Cores: Use pre-built IP cores for common operations (e.g., convolution, pooling) or design custom ones.

High-Level Synthesis (HLS): Use HLS tools to convert high-level code (C/C++) into an RTL (Register Transfer Level) description for the FPGA.

RTL Design: Write or generate Verilog/VHDL code for the neural network.

Synthesis and Place-and-Route: Use FPGA vendor tools (e.g., Vivado for Xilinx, Quartus for Intel) to synthesize the design and map it to the FPGA fabric.

Simulation: Test the design in simulation (e.g., C simulation and C/RTL co-simulation in HLS flows) against a software reference model to ensure functional correctness.
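Simulation results are usually compared against a bit-accurate software model of the fixed-point arithmetic. The sketch below models a dot product in an assumed signed 16-bit format with 8 fractional bits, compares it with a floating-point reference, and fails if the error exceeds a chosen tolerance. The format, tolerance, and input values are illustrative assumptions.

```python
def to_fixed(x, frac_bits, total_bits=16):
    """Round x to a signed fixed-point integer with `frac_bits` fractional bits, saturating."""
    lo, hi = -(1 << (total_bits - 1)), (1 << (total_bits - 1)) - 1
    return max(lo, min(hi, round(x * (1 << frac_bits))))


def fixed_dot(xs, ws, frac_bits):
    """Integer MAC as the hardware would compute it; each product has 2*frac_bits fraction bits."""
    # Python ints never overflow; a real design must size its accumulator explicitly.
    acc = sum(to_fixed(x, frac_bits) * to_fixed(w, frac_bits) for x, w in zip(xs, ws))
    return acc / (1 << (2 * frac_bits))  # convert back to real units for comparison


def float_dot(xs, ws):
    return sum(x * w for x, w in zip(xs, ws))


xs = [0.5, -1.25, 0.3, 2.0]
ws = [0.1, 0.7, -0.45, 0.25]
ref = float_dot(xs, ws)
hw = fixed_dot(xs, ws, frac_bits=8)
assert abs(hw - ref) < 1e-2, "fixed-point result drifted from the float reference"
print(ref, hw)
```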

Hardware Emulation: Use hardware emulation (e.g., the hw_emu target in Vitis) to validate cycle-level behavior and estimated performance before building for the actual board.

Benchmarking: Measure latency, throughput, and power consumption on the actual FPGA hardware.
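A small host-side harness is often enough for latency and throughput numbers. The sketch below times a blocking inference callable per input after a warm-up phase and reports mean latency, an approximate 99th-percentile latency, and throughput. The run_inference callable is a hypothetical stand-in for whatever your runtime exposes; power must be measured separately with board sensors or vendor tools.

```python
import statistics
import time


def benchmark(run_inference, inputs, warmup=10):
    """Time a blocking inference call per input; returns latency stats (ms) and throughput."""
    for x in inputs[:warmup]:
        run_inference(x)  # warm-up: caches, DMA buffers, lazy initialization
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        run_inference(x)
        latencies.append((time.perf_counter() - t0) * 1e3)
    elapsed = time.perf_counter() - start
    latencies.sort()
    p99_index = min(len(latencies) - 1, int(0.99 * len(latencies)))  # nearest-rank approximation
    return {
        "mean_ms": statistics.mean(latencies),
        "p99_ms": latencies[p99_index],
        "throughput_per_s": len(inputs) / elapsed,
    }


# Hypothetical stand-in for an FPGA inference call that takes about 1 ms.
stats = benchmark(lambda x: time.sleep(0.001), list(range(50)))
print(stats)
```

This measures sequential, host-observed latency including data transfer; designs that overlap several requests (batching or asynchronous queues) will show higher throughput than this loop reports.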

Bitstream Generation: Generate the bitstream file that configures the FPGA.

Load the Bitstream: Program the FPGA with the bitstream over JTAG, from on-board flash memory, or from an embedded processor at runtime.

Run Inference: Deploy the neural network on the FPGA and perform inference on real-world data.

Profile Performance: Identify bottlenecks in latency, throughput, or resource usage.

Refine the Model: Further optimize the model or FPGA design based on profiling results.

Update the Deployment: Reprogram the FPGA with the updated design.

Commonly used tools and frameworks:

Xilinx Vitis AI: A comprehensive toolkit for deploying AI models on Xilinx FPGAs.

Intel OpenVINO: An inference toolkit optimized for Intel hardware such as CPUs and GPUs; FPGA deployment is supported through the Intel FPGA AI Suite.

Apache TVM: An open-source machine learning compiler stack that supports FPGA backends.

FINN: A framework from Xilinx Research for building dataflow accelerators for quantized neural networks on FPGAs.

hls4ml: A tool for translating machine learning models into FPGA firmware using HLS.

Example workflow with Xilinx Vitis AI:

1. Train a model in TensorFlow/PyTorch.

2. Quantize the model using Vitis AI's quantizer.

3. Compile the model using the Vitis AI Compiler to generate DPU instructions.

4. Build the FPGA hardware design, including the DPU IP, using Vivado or Vitis.

5. Program the FPGA and deploy the model.

By following these steps, you can successfully deploy neural network models on FPGAs, leveraging their reconfigurability and efficiency for edge computing, real-time processing, and other demanding applications.