Time: 2024-01-16 15:21:02

FPGA stands for Field-Programmable Gate Array. It is an integrated circuit that can be reconfigured or programmed after manufacturing. FPGAs contain an array of programmable logic blocks and interconnects that can be configured by the user to perform specific tasks or functions. This flexibility makes FPGAs highly versatile and suitable for a wide range of applications, including digital signal processing, telecommunications, automotive, aerospace, and many others.
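To make "programmable logic block" more concrete, here is a minimal Python sketch of one common building block, the look-up table (LUT). It assumes a simplified model in which the stored truth table is the block's entire configuration; the `LookupTable` class and its method names are hypothetical and only illustrate the idea, not any vendor's architecture or tooling.

```python
class LookupTable:
    """Toy k-input look-up table: the stored bits act as the configuration."""

    def __init__(self, num_inputs, truth_table):
        self.num_inputs = num_inputs
        self.truth_table = []
        self.reprogram(truth_table)

    def reprogram(self, truth_table):
        """Load a new configuration; the block itself stays the same."""
        if len(truth_table) != 2 ** self.num_inputs:
            raise ValueError("truth table needs 2**num_inputs entries")
        self.truth_table = list(truth_table)

    def evaluate(self, *inputs):
        """Use the input bits as an address into the stored truth table."""
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        address = 0
        for bit in inputs:  # first input is the most significant address bit
            address = (address << 1) | (bit & 1)
        return self.truth_table[address]


lut = LookupTable(2, [0, 0, 0, 1])  # configured as a 2-input AND
and_outputs = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

lut.reprogram([0, 1, 1, 0])  # same block, now configured as XOR
xor_outputs = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]
```

The same object computes AND and then XOR purely because its stored bits changed, which is the sense in which an FPGA is reconfigured after manufacturing.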

One of the key advantages of FPGAs is their ability to be customized for specific tasks, allowing for rapid prototyping and development of specialized hardware. This makes them particularly useful in situations where the requirements may change frequently or where time-to-market is critical. Additionally, FPGAs can be reprogrammed multiple times, making them ideal for applications that require flexibility and upgradability.

FPGAs are often used where performance and power efficiency are crucial. Because an algorithm is implemented directly as hardware, many operations can run in parallel in dedicated circuits, which makes FPGAs well-suited for real-time processing, high-speed data acquisition, and image and signal processing. FPGAs can also accelerate specific functions or algorithms, and for workloads that map well to such circuits they can deliver substantial speed-ups over equivalent software running on a general-purpose processor.
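As an illustration of the kind of signal-processing task mentioned above, the following Python sketch models a finite impulse response (FIR) filter, a classic streaming computation. It is only a behavioral model under simplifying assumptions: the function name `fir_filter` is hypothetical, and the code evaluates the multiplications one after another, whereas a hardware implementation could give each coefficient its own multiplier working in the same clock cycle.

```python
from collections import deque


def fir_filter(samples, coefficients):
    """Stream samples through an FIR filter, one output per input sample."""
    # Shift register holding the most recent samples, newest first.
    taps = deque([0] * len(coefficients), maxlen=len(coefficients))
    outputs = []
    for sample in samples:
        taps.appendleft(sample)  # the oldest sample falls off the far end
        # In hardware these products could all be computed concurrently;
        # this model simply sums them in sequence.
        outputs.append(sum(c * t for c, t in zip(coefficients, taps)))
    return outputs


# Three equal coefficients give a running sum of the last three samples.
moving_sum = fir_filter([1, 2, 3, 4, 5], [1, 1, 1])
```

Each output depends only on a fixed window of recent inputs, so the filter can produce a result for every incoming sample without waiting for a batch to fill.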

In recent years, FPGAs have gained popularity in artificial intelligence and machine learning. Because they can implement custom neural network architectures directly in hardware, they are a compelling choice for accelerating AI inference. As demand for specialized hardware accelerators grows, FPGAs are expected to play an increasingly important role in AI and other advanced technologies.

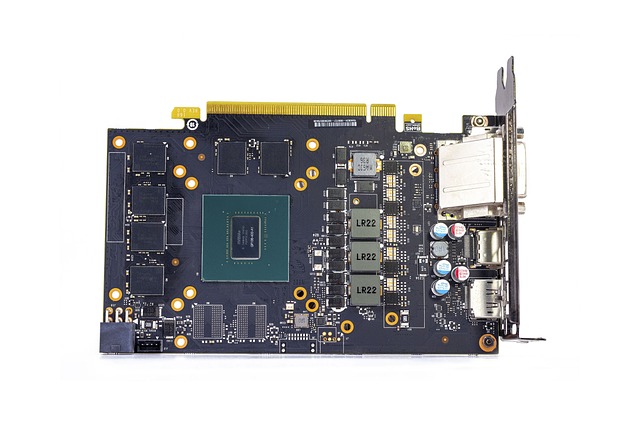

A GPU, or Graphics Processing Unit, is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. Originally developed for rendering high-quality graphics in video games, GPUs have evolved to become essential components in a wide range of applications, including scientific simulations, artificial intelligence, cryptocurrency mining, and more.

One of the defining features of GPUs is their parallel processing capability, which allows them to handle multiple tasks simultaneously. This makes them well-suited for computationally intensive workloads that can be broken down into smaller, independent tasks, such as rendering 3D graphics, training neural networks, or performing complex mathematical calculations. As a result, GPUs have become indispensable in fields such as machine learning, where they are used to accelerate the training and inference of deep learning models.

This parallelism comes from the large number of cores, or processing units, in a modern GPU, which execute many operations at the same time. That makes GPUs highly efficient at tasks that can be parallelized, such as matrix operations, image processing, and scientific simulations. Programming frameworks such as CUDA (NVIDIA's proprietary platform) and OpenCL (an open, cross-vendor standard) have made it easier for developers to harness this computational power for a wide range of applications.
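The data-parallel pattern that these frameworks target can be sketched in plain Python. The example below is an assumption-laden illustration, not GPU code: `saxpy_element` and `saxpy` are hypothetical names, and a thread pool stands in for GPU threads only to show the structure (Python threads will not actually speed up this arithmetic).

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_element(i, a, x, y):
    """Work for a single index: reads only x[i] and y[i]."""
    return a * x[i] + y[i]


def saxpy(a, x, y, workers=4):
    """Compute a*x + y by applying the per-element function to every index."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each index is independent, so the work can be split across
        # workers in any order; map() returns results in index order.
        return list(pool.map(lambda i: saxpy_element(i, a, x, y), range(len(x))))


result = saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0])
```

The key property is that no element depends on any other. In a GPU framework the per-element function would roughly correspond to a kernel, with one lightweight thread launched per index.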

The use of GPUs in high-performance computing has also led to the emergence of GPU-accelerated computing, where GPUs are used to offload specific computational tasks from the CPU, resulting in significant performance improvements. This approach has been widely adopted in scientific research, data analytics, and other fields where complex computations are required. As the demand for high-performance computing continues to grow, GPUs are expected to play an increasingly important role in driving advancements in technology and enabling new applications that require massive computational power.
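How much offloading helps depends on how much of the program can actually be moved to the GPU. A rough way to estimate this is Amdahl's law, sketched below in Python. The function name `offload_speedup` and the sample numbers are illustrative assumptions rather than measurements, and the model ignores the cost of moving data between CPU and GPU memory.

```python
def offload_speedup(accelerated_fraction, kernel_speedup):
    """Estimate overall speed-up using Amdahl's law.

    accelerated_fraction: share of the original run time that is offloaded (0 to 1).
    kernel_speedup: how many times faster the offloaded part runs on the accelerator.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    remaining = 1.0 - accelerated_fraction          # part that stays on the CPU
    offloaded = accelerated_fraction / kernel_speedup
    return 1.0 / (remaining + offloaded)


# Hypothetical scenario: the offloaded portion runs 50x faster on the GPU.
for fraction in (0.5, 0.9, 0.99):
    print(f"{fraction:.0%} offloaded -> {offload_speedup(fraction, 50.0):.2f}x overall")
```

Under this model the part left on the CPU quickly becomes the limit, which is why GPU acceleration pays off most for workloads that are almost entirely parallelizable.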

FPGAs (Field-Programmable Gate Arrays) and GPUs (Graphics Processing Units) are both specialized hardware accelerators that offer unique advantages for different types of computational tasks. Understanding the differences between the two can help in choosing the right technology for a specific application.

FPGAs are highly flexible and can be reconfigured to implement custom logic circuits, making them suitable for a wide range of applications that require specialized hardware acceleration. Unlike GPUs, which are optimized for parallel processing of large data sets, FPGAs can be tailored to perform specific tasks with minimal power consumption and low latency. This makes them ideal for applications that require real-time processing, such as signal processing, telecommunications, and industrial automation. Additionally, FPGAs are well-suited for prototyping and rapid development of custom hardware solutions, as they can be reprogrammed multiple times to adapt to changing requirements.

On the other hand, GPUs are designed for massively parallel processing and excel at handling large-scale data-parallel computations, such as those found in graphics rendering, scientific simulations, and machine learning. With a large number of cores and high memory bandwidth, GPUs can efficiently execute thousands of threads simultaneously, making them well-suited for tasks that can be parallelized, such as matrix operations, image processing, and deep learning. As a result, GPUs have become indispensable in fields such as artificial intelligence, where they are used to accelerate the training and inference of complex neural network models.

When comparing FPGAs and GPUs, it's important to consider the trade-offs between flexibility, performance, and power efficiency. FPGAs offer hardware-level flexibility and low latency for specific tasks, but they typically take more effort to program and optimize, since designs are usually written in a hardware description language such as Verilog or VHDL. GPUs, on the other hand, provide high throughput and are relatively easy to program using standard frameworks, but they may not match FPGAs in customization or latency for certain applications.

In some cases, a combination of FPGAs and GPUs may be used to leverage the strengths of both technologies. For example, FPGAs can be used to offload specific computational tasks that require low latency and custom hardware acceleration, while GPUs can handle the bulk of parallelizable computations, resulting in a balanced and efficient system for a wide range of applications. As technology continues to advance, the synergy between FPGAs and GPUs is expected to play a crucial role in driving innovation and enabling new applications that require diverse computational capabilities.

The applications of GPUs (Graphics Processing Units) have expanded significantly beyond their original purpose of rendering high-quality graphics in video games. Today, GPUs are essential components in a wide range of fields, driving advancements in scientific research, artificial intelligence, data analytics, cryptocurrency mining, and more.

In the realm of scientific research, GPUs are used to accelerate complex simulations and calculations in fields such as physics, chemistry, and biology. Their parallel processing power and high memory bandwidth make them well-suited for handling large-scale computational tasks, enabling researchers to perform simulations and analyses that were previously impractical or time-consuming.

In the field of artificial intelligence (AI), GPUs play a pivotal role in training and deploying deep learning models. The parallel processing capabilities of GPUs allow for the efficient execution of the matrix and vector operations that underpin neural network training, significantly reducing the time required to train complex models. Furthermore, GPUs are used for AI inference, enabling real-time processing of data for applications such as image recognition, natural language processing, and autonomous vehicles.
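To show why these matrix and vector operations parallelize so well, here is a minimal pure-Python sketch of the forward pass of one dense (fully connected) layer, y = Wx + b. The function name `dense_forward` and the tiny weight values are made up for illustration. Real frameworks run the same arithmetic through optimized GPU libraries rather than Python loops.

```python
def dense_forward(weights, bias, inputs):
    """Compute y = W x + b for one dense layer using plain lists."""
    if len(weights) != len(bias):
        raise ValueError("need one bias value per output row")
    outputs = []
    for row, b in zip(weights, bias):
        if len(row) != len(inputs):
            raise ValueError("row length must match the input length")
        # Each output is an independent dot product, so on a GPU
        # every row could be assigned to its own group of threads.
        outputs.append(sum(w * x for w, x in zip(row, inputs)) + b)
    return outputs


weights = [[1.0, 2.0], [0.0, -1.0], [0.5, 0.5]]  # 3 outputs, 2 inputs
bias = [0.0, 1.0, -1.0]
activations = dense_forward(weights, bias, [2.0, 4.0])
```

Because no output row depends on another, the work grows with the size of the layer but can be spread across as many cores as are available.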

Data analytics and machine learning also benefit from the parallel processing power of GPUs. Tasks such as data preprocessing, feature extraction, and model training can be accelerated using GPUs, leading to faster insights and more efficient data-driven decision-making. Additionally, GPUs are used in recommendation systems, fraud detection, and other data-intensive applications that require rapid processing of large datasets.

Cryptocurrency mining has been another prominent application of GPUs. Their ability to perform many parallel computations makes them well-suited to the hashing algorithms used in proof-of-work mining. GPUs were widely used for early Bitcoin mining and for Ethereum mining until Ethereum moved to proof-of-stake in 2022; Bitcoin mining has since shifted to dedicated ASICs. GPUs remain common hardware for individuals and organizations mining other proof-of-work cryptocurrencies.
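The hashing workload can be illustrated with a toy proof-of-work search in Python. This is a simplified sketch, not any real coin's mining algorithm: the names `meets_target` and `find_nonce`, the header bytes, and the "leading zero hex digits" difficulty rule are assumptions made for the example. Real schemes differ in detail (Bitcoin, for instance, applies SHA-256 twice to a structured block header).

```python
import hashlib


def meets_target(header, nonce, zero_hex_digits):
    """Check whether SHA-256(header + nonce) starts with enough zero hex digits."""
    digest = hashlib.sha256(header + nonce.to_bytes(8, "big")).hexdigest()
    return digest.startswith("0" * zero_hex_digits)


def find_nonce(header, zero_hex_digits, max_nonce=1_000_000):
    """Try nonces in order and return the first one that meets the target."""
    for nonce in range(max_nonce):
        # Every candidate is checked independently of the others, so a GPU
        # could test a different nonce in each thread at the same time.
        if meets_target(header, nonce, zero_hex_digits):
            return nonce
    return None  # no solution within the search limit


nonce = find_nonce(b"example block header", zero_hex_digits=3)
```

The search is brute force with no shared state between attempts, which is the property that makes it a natural fit for hardware with thousands of parallel threads.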

Furthermore, GPUs are used in medical imaging, weather forecasting, computational fluid dynamics, and a wide range of other scientific and engineering applications that require high-performance computing. As technology continues to advance, the applications of GPUs are expected to expand further, driving innovation and enabling new possibilities in diverse fields.