Time: 2025-02-08 11:33:12

Embedded image processing using Field-Programmable Gate Arrays (FPGAs) is a powerful approach for real-time, high-performance applications. FPGAs are highly parallel and reconfigurable, making them ideal for tasks like image filtering, object detection, and computer vision. Below is a detailed guide to implementing embedded image processing using FPGAs.

Parallel Processing: FPGAs can process multiple pixels or operations simultaneously.

Low Latency: Real-time processing with minimal delay.

Customizability: Hardware can be tailored to specific algorithms.

Energy Efficiency: Lower power consumption compared to GPUs for certain tasks.

Determine the specific image processing task, such as:

Image Filtering: Gaussian blur, edge detection (e.g., Sobel, Canny).

Feature Extraction: Corner detection, Hough transform.

Object Detection: Haar cascades, convolutional neural networks (CNNs).

Image Enhancement: Histogram equalization, noise reduction.

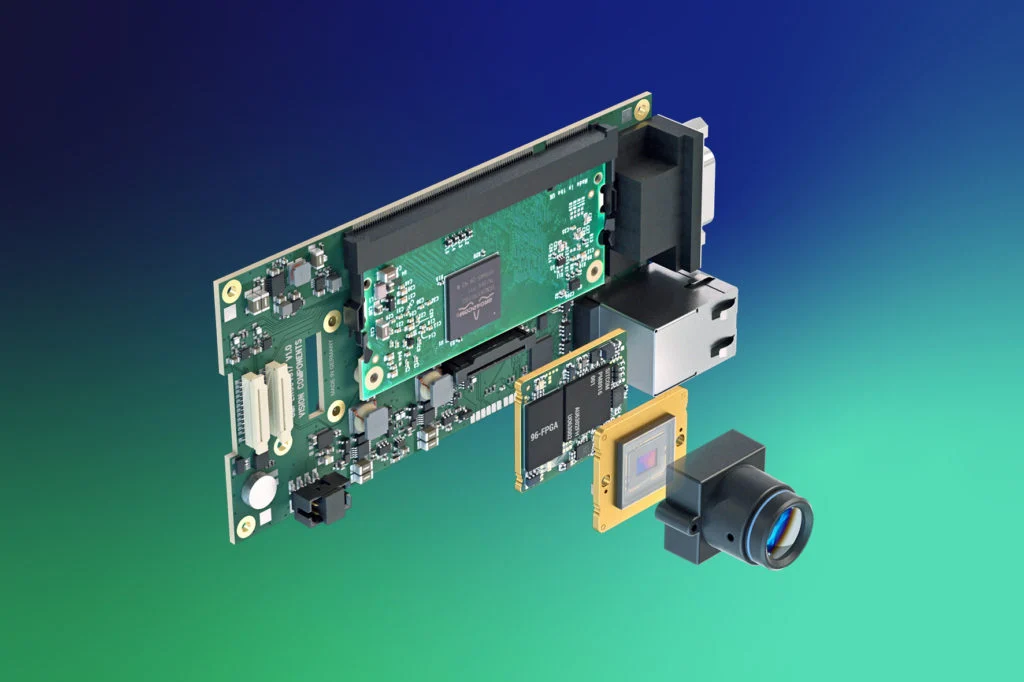

Choose an FPGA development board based on your requirements:

Entry-Level: Xilinx Spartan, Intel Cyclone.

Mid-Range: Xilinx Artix, Intel Arria.

High-End: Xilinx Virtex, Intel Stratix.

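Popular development platforms include:

Xilinx Zynq-7000: An SoC family that combines FPGA fabric with ARM Cortex-A9 processors.

Terasic DE10-Nano: A board built around an Intel Cyclone V SoC with a dual-core ARM Cortex-A9.

Intel (formerly Altera) Cyclone V: An affordable and versatile device family found on many low-cost boards.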

Break down the image processing task into stages:

Image Acquisition: Capture images using a camera (e.g., CMOS or CCD).

Preprocessing: Convert raw sensor data (e.g., demosaic a Bayer pattern to RGB), resize, or normalize.

Processing: Apply algorithms like convolution, thresholding, or feature extraction.

Postprocessing: Display or store the results.
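The preprocessing and processing stages above can be modeled in software before any HDL is written. The following is a minimal sketch, assuming 8-bit RGB input; the names `Rgb`, `rgb_to_gray`, `threshold`, and `process_frame` are hypothetical, and the integer weights 77/150/29 are an assumed approximation of the common 0.299/0.587/0.114 luma weights scaled by 256, chosen so the conversion needs only multiplies, adds, and a shift.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical pixel type for this sketch: 8-bit RGB.
struct Rgb { std::uint8_t r, g, b; };

// Preprocessing stage: integer-only luma approximation.
// The weights sum to 256, so the result after the shift always fits in 8 bits.
std::uint8_t rgb_to_gray(Rgb p) {
    const unsigned y = (77u * p.r + 150u * p.g + 29u * p.b) >> 8;
    assert(y <= 255u);
    return static_cast<std::uint8_t>(y);
}

// Processing stage: binary threshold.
std::uint8_t threshold(std::uint8_t gray, std::uint8_t level) {
    return gray > level ? 255 : 0;
}

// Runs both stages over a frame stored in row-major order.
std::vector<std::uint8_t> process_frame(const std::vector<Rgb>& frame,
                                        std::uint8_t level) {
    std::vector<std::uint8_t> out;
    out.reserve(frame.size());
    for (Rgb p : frame) out.push_back(threshold(rgb_to_gray(p), level));
    return out;
}
```

Because each output pixel depends only on one input pixel, these two stages map naturally onto a streaming pipeline that needs no frame storage.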

Use Hardware Description Languages (HDLs) like VHDL or Verilog to design the FPGA logic. Key components include:

Image Buffer: Store incoming image data (e.g., using Block RAM).

Processing Units: Implement parallel pipelines for pixel operations.

Control Logic: Manage data flow and synchronization.
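To make the image buffer idea concrete, here is a software sketch of how two line buffers can turn a raster-order pixel stream into a 3x3 neighborhood. The class name `WindowGenerator` and its interface are hypothetical; the model only illustrates the data movement that Block RAM line buffers and a small register window would perform, and it does not handle image borders.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Software model of a 3x3 window generator (hypothetical class).
// Two line buffers hold the previous two rows, as Block RAM would on the FPGA.
class WindowGenerator {
public:
    explicit WindowGenerator(std::size_t width)
        : width_(width), line1_(width, 0), line2_(width, 0) {
        assert(width >= 3);
    }

    // Accepts one pixel per call in raster order, like one pixel per clock.
    // Returns true when window() holds a full 3x3 neighborhood.
    bool push(std::uint8_t pixel) {
        // Pixels at the current column: two rows up and one row up.
        const std::uint8_t top = line2_[col_];
        const std::uint8_t mid = line1_[col_];

        // Shift the window one column to the left, then append the new column.
        for (auto& row : win_) { row[0] = row[1]; row[1] = row[2]; }
        win_[0][2] = top;
        win_[1][2] = mid;
        win_[2][2] = pixel;

        // Update the line buffers for the next row.
        line2_[col_] = mid;
        line1_[col_] = pixel;

        const bool valid = row_ >= 2 && col_ >= 2;
        if (++col_ == width_) { col_ = 0; ++row_; }
        return valid;
    }

    const std::array<std::array<std::uint8_t, 3>, 3>& window() const { return win_; }

private:
    std::size_t width_;
    std::size_t col_ = 0, row_ = 0;
    std::vector<std::uint8_t> line1_, line2_;  // previous row, row before that
    std::array<std::array<std::uint8_t, 3>, 3> win_{};
};
```

Only two rows of the image are stored at any time, which is why this structure fits in on-chip memory even when a full frame does not.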

Example: Implementing a 3x3 Sobel edge detection filter:

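    module sobel_filter (
        input            clk,
        input      [7:0] pixel_in,
        output reg [7:0] pixel_out
    );
        // 3x3 window registers
        reg [7:0] kernel [0:2][0:2];

        // Signed gradients; each lies in [-1020, 1020], so 11 bits suffice
        reg signed [10:0] Gx, Gy;

        always @(posedge clk) begin
            // Shift pixels into the window
            kernel[0][0] <= kernel[0][1];
            kernel[0][1] <= kernel[0][2];
            kernel[0][2] <= pixel_in;
            // Repeat for other rows...

            // Apply Sobel operators (Gx and Gy). Pixels are zero-extended
            // so that the subtractions use signed arithmetic.
            Gx = ($signed({3'b0, kernel[0][2]}) - $signed({3'b0, kernel[0][0]}))
               + 2 * ($signed({3'b0, kernel[1][2]}) - $signed({3'b0, kernel[1][0]}))
               + ($signed({3'b0, kernel[2][2]}) - $signed({3'b0, kernel[2][0]}));
            Gy = ($signed({3'b0, kernel[2][0]}) - $signed({3'b0, kernel[0][0]}))
               + 2 * ($signed({3'b0, kernel[2][1]}) - $signed({3'b0, kernel[0][1]}))
               + ($signed({3'b0, kernel[2][2]}) - $signed({3'b0, kernel[0][2]}));

            // Threshold the gradient; absolute values are used so that
            // edges of either polarity are detected
            pixel_out <= (((Gx < 0) ? -Gx : Gx) > 127 || ((Gy < 0) ? -Gy : Gy) > 127)
                         ? 8'd255 : 8'd0;
        end
    endmodule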
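A software reference for the same window arithmetic is useful for checking the hardware against known inputs. The sketch below is an illustrative model, not the module's exact behavior: the function name `sobel3x3` and the `threshold` parameter are hypothetical, and it thresholds the absolute values of Gx and Gy (an assumption of this sketch) so that dark-to-bright and bright-to-dark edges are both detected.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Result of applying both Sobel kernels to one 3x3 window.
struct SobelResult {
    int gx;             // horizontal gradient
    int gy;             // vertical gradient
    std::uint8_t edge;  // 255 if the window is classified as an edge, else 0
};

// w[row][col] is the 3x3 neighborhood; threshold is an assumed parameter.
SobelResult sobel3x3(const std::uint8_t w[3][3], int threshold) {
    assert(threshold >= 0);
    // Right column minus left column, middle row weighted by 2.
    const int gx = (w[0][2] - w[0][0]) + 2 * (w[1][2] - w[1][0]) + (w[2][2] - w[2][0]);
    // Bottom row minus top row, middle column weighted by 2.
    const int gy = (w[2][0] - w[0][0]) + 2 * (w[2][1] - w[0][1]) + (w[2][2] - w[0][2]);
    const bool is_edge = std::abs(gx) > threshold || std::abs(gy) > threshold;
    return {gx, gy, static_cast<std::uint8_t>(is_edge ? 255 : 0)};
}
```

For a window whose right column is 255 and whose other pixels are 0, Gx = 255 + 2·255 + 255 = 1020 and Gy = 0, so the window is marked as an edge. A uniform window gives Gx = Gy = 0.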

For faster development, use high-level synthesis (HLS) tools like Xilinx Vivado HLS (now Vitis HLS) or the Intel HLS Compiler to convert C/C++ algorithms into HDL code. Example:

    #include "hls_video.h"

    // HLS_STREAM_8 is assumed to be a project-defined 8-bit AXI4-Stream type
    void sobel_filter(HLS_STREAM_8& src, HLS_STREAM_8& dst) {
    #pragma HLS INTERFACE axis port=src
    #pragma HLS INTERFACE axis port=dst
    #pragma HLS PIPELINE
        // Sobel filter implementation in C++
        // ...
    }

Camera Interface: Use protocols like MIPI CSI-2 or parallel interfaces.

Display Interface: Connect to HDMI, VGA, or LCD displays.

Memory: Use DDR SDRAM for storing large images.
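A quick calculation shows why full frames usually go to external DDR SDRAM while line buffers stay on chip. The helper names below are hypothetical, and the resolution and frame rate in the usage note are example values rather than figures from any particular board.

```cpp
#include <cassert>
#include <cstdint>

// Bytes needed to hold one frame.
std::uint64_t frame_bytes(std::uint64_t width, std::uint64_t height,
                          std::uint64_t bytes_per_pixel) {
    return width * height * bytes_per_pixel;
}

// Sustained bandwidth in bytes per second for one pass over the stream.
std::uint64_t stream_bandwidth(std::uint64_t width, std::uint64_t height,
                               std::uint64_t bytes_per_pixel, std::uint64_t fps) {
    return frame_bytes(width, height, bytes_per_pixel) * fps;
}

// Bytes needed for the line buffers of a k x k window: k - 1 full rows.
std::uint64_t line_buffer_bytes(std::uint64_t width, std::uint64_t kernel,
                                std::uint64_t bytes_per_pixel) {
    assert(kernel >= 1);
    return (kernel - 1) * width * bytes_per_pixel;
}
```

For a 1920x1080 RGB frame at 3 bytes per pixel, `frame_bytes` gives 6,220,800 bytes (about 6.2 MB), and at 60 frames per second `stream_bandwidth` gives 373,248,000 bytes per second for a single pass. Writing each frame to DDR and reading it back doubles that figure. By contrast, `line_buffer_bytes(1920, 3, 1)` is only 3,840 bytes for an 8-bit grayscale 3x3 filter.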

Pipelining: Process multiple stages simultaneously.

Parallelism: Use multiple processing units for different image regions.

Resource Sharing: Reuse hardware blocks to save logic elements.
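The benefit of pipelining and region-level parallelism can be estimated with simple arithmetic. The sketch below uses hypothetical helper names: `pipelined_cycles` assumes a pipeline that accepts a new pixel every `interval` cycles, and `split_rows` assumes the image is divided into horizontal strips, one per processing unit.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Cycles to push `items` through a pipeline of `depth` stages that accepts a
// new item every `interval` cycles (the initiation interval).
std::uint64_t pipelined_cycles(std::uint64_t items, std::uint64_t depth,
                               std::uint64_t interval) {
    assert(items > 0 && depth > 0 && interval > 0);
    return depth + (items - 1) * interval;
}

// Cycles when each item must finish all stages before the next one starts.
std::uint64_t sequential_cycles(std::uint64_t items, std::uint64_t depth) {
    return items * depth;
}

// Half-open row range [begin, end) assigned to one processing unit.
struct Strip { std::uint32_t begin, end; };

// Splits `height` rows into `units` horizontal strips of near-equal size.
std::vector<Strip> split_rows(std::uint32_t height, std::uint32_t units) {
    assert(units > 0 && units <= height);
    std::vector<Strip> strips;
    const std::uint32_t base = height / units;
    const std::uint32_t extra = height % units;
    std::uint32_t row = 0;
    for (std::uint32_t u = 0; u < units; ++u) {
        const std::uint32_t rows = base + (u < extra ? 1u : 0u);
        strips.push_back({row, row + rows});
        row += rows;
    }
    return strips;
}
```

For a 640x480 frame (307,200 pixels) and a 5-stage pipeline with an initiation interval of 1, the pipelined design needs 307,204 cycles instead of 1,536,000. Note that neighborhood filters need overlapping rows at strip boundaries (one extra row on each side for a 3x3 kernel), which this sketch does not model.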

Use simulation tools like ModelSim or Xilinx Vivado Simulator.

Verify functionality with test images.

Debug using onboard LEDs, external logic analyzers, or on-chip debug cores (e.g., Xilinx ILA, Intel Signal Tap).
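One way to verify functionality with test images is to generate a synthetic frame whose expected output is known, then load it into a simulation testbench. The sketch below is one possible approach with hypothetical function names: it builds a vertical step edge and formats it as one hex byte per line, a layout that Verilog's `$readmemh` system task can read into an 8-bit-wide memory.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Builds a grayscale test frame with a vertical step edge at column `edge_col`:
// pixels left of the edge are `low`, the rest are `high`.
std::vector<std::uint8_t> make_step_image(std::size_t width, std::size_t height,
                                          std::size_t edge_col,
                                          std::uint8_t low, std::uint8_t high) {
    assert(edge_col <= width);
    std::vector<std::uint8_t> img(width * height);
    for (std::size_t y = 0; y < height; ++y)
        for (std::size_t x = 0; x < width; ++x)
            img[y * width + x] = (x < edge_col) ? low : high;
    return img;
}

// Formats pixels as one two-digit hex value per line, in raster order.
std::string to_readmemh(const std::vector<std::uint8_t>& img) {
    std::string text;
    char buf[4];  // two hex digits, newline, terminating NUL
    for (std::uint8_t p : img) {
        std::snprintf(buf, sizeof buf, "%02x\n", static_cast<unsigned>(p));
        text += buf;
    }
    return text;
}
```

Writing the returned string to a file gives the testbench a stimulus whose edge position is known in advance, so the filter output can be compared against the expected column.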

1. Real-Time Edge Detection:

Capture video from a camera.

Apply Sobel or Canny edge detection.

Display the processed video on a monitor.

2. Object Tracking:

Use background subtraction or optical flow.

Track moving objects in a video stream.
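Background subtraction can be sketched in a few lines: compare each pixel with a stored background, mark those that differ by more than a threshold, and track the centroid of the marked pixels. The function and struct names below are hypothetical, and a single static background frame is an assumption of this sketch; practical systems often update the background over time.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

struct Centroid {
    bool found;  // false when no pixel exceeded the threshold
    double x, y; // mean column and row of the foreground pixels
};

// Frames are 8-bit grayscale in row-major order with `width` pixels per row.
Centroid track_by_background_subtraction(const std::vector<std::uint8_t>& background,
                                         const std::vector<std::uint8_t>& frame,
                                         std::size_t width, int threshold) {
    assert(width > 0 && background.size() == frame.size() && frame.size() % width == 0);
    std::uint64_t sum_x = 0, sum_y = 0, count = 0;
    for (std::size_t i = 0; i < frame.size(); ++i) {
        const int diff = std::abs(static_cast<int>(frame[i]) - static_cast<int>(background[i]));
        if (diff > threshold) {  // pixel belongs to the moving object
            sum_x += i % width;
            sum_y += i / width;
            ++count;
        }
    }
    if (count == 0) return {false, 0.0, 0.0};
    return {true, static_cast<double>(sum_x) / static_cast<double>(count),
            static_cast<double>(sum_y) / static_cast<double>(count)};
}
```

The loop only accumulates three running sums, so in hardware it can run on the pixel stream without a frame buffer for the current image; only the background frame needs storage.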

3. Convolutional Neural Networks (CNNs):

Implement lightweight CNNs for object recognition.

Use frameworks like Xilinx Vitis AI or Intel OpenVINO.

Complexity: Designing and debugging FPGA logic can be challenging.

Resource Constraints: Limited logic elements, memory, and DSP blocks.

Latency: Balancing throughput and latency in real-time systems.

Toolchain Learning Curve: Mastering FPGA development tools takes time.

Embedded image processing on FPGAs offers high performance and flexibility for real-time applications. By exploiting the parallelism of the FPGA fabric, you can build efficient, high-throughput image processing systems. With the right tools and techniques, FPGAs are a strong fit for applications like computer vision, robotics, and medical imaging.